OCI regions are grouped into realms; a realm is a logical collection of regions. Realms are completely isolated from one another and do not share any data, so regions that belong to different realms are entirely independent. Regions within the same realm, however, can share data through replication.

Tenancies

OCI users are organized into a tenancy, a logical grouping that represents a business customer. A tenancy contains users, groups, and compartments. Each tenancy is based in a home region but can also be subscribed to other regions. When a tenancy subscribes to another region, the identity data created in the home region is automatically replicated to that region; this replication is what allows the tenancy to access services there. Identity data can only be modified in the tenancy's home region.
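To make the home-region rule concrete, here is a minimal Python sketch that models a tenancy as an in-memory object. Everything in it (the `Tenancy` class, `subscribe`, `put_user`, `HomeRegionOnlyError`) is hypothetical and is not part of the OCI SDK or API; the region identifiers are only examples. It shows two properties described above: subscribing to a region seeds it with a copy of the identity data, and identity writes are rejected anywhere except the home region.

```python
from __future__ import annotations

from dataclasses import dataclass, field


class HomeRegionOnlyError(Exception):
    """Raised when an identity write is attempted outside the home region."""


@dataclass
class Tenancy:
    # Hypothetical model for illustration only; not an OCI API.
    name: str
    home_region: str
    subscribed_regions: set[str] = field(default_factory=set)
    # One replica of the identity data (user -> group) per subscribed region.
    identity: dict[str, dict[str, str]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        self.subscribed_regions.add(self.home_region)
        self.identity[self.home_region] = {}

    def subscribe(self, region: str) -> None:
        """Subscribe to a region and seed it with a copy of the identity data."""
        self.subscribed_regions.add(region)
        self.identity[region] = dict(self.identity[self.home_region])

    def put_user(self, region: str, user: str, group: str) -> None:
        """Write identity data; only the home region accepts writes."""
        if region != self.home_region:
            raise HomeRegionOnlyError(f"identity writes must go to {self.home_region}")
        self.identity[self.home_region][user] = group
        # Replicate the updated identity data to every other subscribed region.
        for other in self.subscribed_regions - {self.home_region}:
            self.identity[other] = dict(self.identity[self.home_region])


t = Tenancy("acme", home_region="us-ashburn-1")
t.subscribe("uk-london-1")
t.put_user("us-ashburn-1", "alice", "admins")
print(t.identity["uk-london-1"])  # {'alice': 'admins'}
```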

Compartments

Compartments serve as logical containers for resources, and they usually have an associated policy that regulates access to the resources inside. Compartments can also be nested, allowing for finer-grained organization and management of resources. Additionally, compartments can span regions, so compute instances from different regions can be added to the same compartment and governed by a single policy.
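In OCI, access granted by a policy in a compartment also applies to that compartment's subcompartments. The sketch below is a toy Python model, assuming a simple parent-pointer tree, of how nesting lets one policy govern everything beneath it. The `Compartment` class and `effective_policies` method are hypothetical and are not the OCI SDK; the policy string follows OCI's policy-statement syntax, but the group and compartment names are made up.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Compartment:
    # Hypothetical model of a compartment tree; not the OCI SDK.
    name: str
    parent: Compartment | None = None
    policies: list[str] = field(default_factory=list)

    def child(self, name: str) -> Compartment:
        """Create a compartment nested under this one."""
        return Compartment(name, parent=self)

    def effective_policies(self) -> list[str]:
        """Policies attached here plus those inherited from every ancestor."""
        inherited = self.parent.effective_policies() if self.parent else []
        return inherited + self.policies


root = Compartment("tenancy-root")
network = root.child("network")
prod = network.child("prod")
network.policies.append(
    "Allow group NetworkAdmins to manage virtual-network-family in compartment network"
)
# prod has no policies of its own but inherits the one attached to network.
print(prod.effective_policies())
```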

Availability Domain

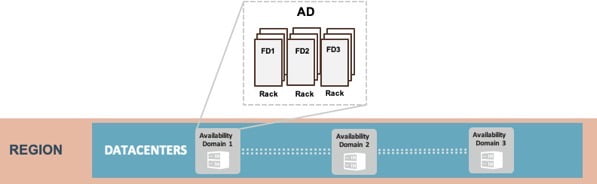

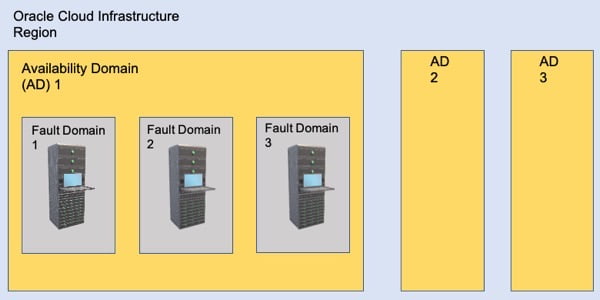

The diagrams describe an availability domain (AD): a physical data center that fails independently of other ADs and has low-latency connectivity to them. Customers are free to choose the AD in which they set up resources such as databases and compute instances. To balance utilization across ADs, the AD names a tenancy sees are randomly mapped to physical ADs, so "AD-1" in one tenancy is not necessarily the same data center as "AD-1" in another. A screenshot is provided to show a logical representation of how ADs are mapped in a region and how each AD maps to its fault domains.
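The exact mechanism Oracle uses to randomize AD names is not described here, so the following is only a sketch of the idea, assuming a per-tenancy seeded shuffle: hashing the tenancy ID gives each tenancy a stable but different mapping from logical names (`AD-1`, `AD-2`, `AD-3`) to physical data centers. The function name and the physical data center labels are hypothetical.

```python
from __future__ import annotations

import hashlib
import random


def ad_mapping(tenancy_id: str, physical_ads: list[str]) -> dict[str, str]:
    """Map logical AD names (AD-1, AD-2, ...) to physical ADs for one tenancy.

    Hypothetical scheme: a shuffle seeded with a hash of the tenancy ID, so the
    mapping is stable for a given tenancy but differs between tenancies.
    """
    seed = int.from_bytes(hashlib.sha256(tenancy_id.encode()).digest()[:8], "big")
    shuffled = physical_ads[:]  # copy so the caller's list is not modified
    random.Random(seed).shuffle(shuffled)
    return {f"AD-{i + 1}": phys for i, phys in enumerate(shuffled)}


physical = ["dc-a", "dc-b", "dc-c"]  # hypothetical physical data center labels
for tenancy in ["tenancy-1", "tenancy-2", "tenancy-3"]:
    print(tenancy, ad_mapping(tenancy, physical))
```

Because the seed depends only on the tenancy ID, repeated calls return the same mapping for a tenancy, while different tenancies generally see different orderings, which spreads load even if many customers pick the same logical AD name.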

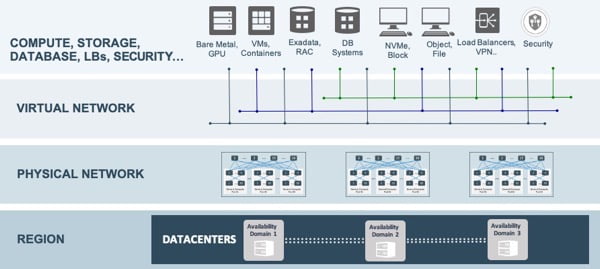

Each AD in OCI runs a network that is both highly scalable and high-performance. Because the network is not oversubscribed, there is no noisy-neighbor problem. An AD can scale up to approximately 1 million ports, and the non-oversubscribed network provides predictable low latency and high-speed interconnectivity, with traffic between hosts traversing no more than two hops. The logical diagram below maps out how the physical network infrastructure connects to the regions.
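"Not oversubscribed" can be made precise with a bit of arithmetic: on a leaf switch, compare the total bandwidth of the host-facing ports with the total bandwidth of the uplinks toward the spine. A ratio of 1.0 or lower means every host can transmit at line rate at the same time. The Python helper below computes that ratio; the port counts and speeds in the example are hypothetical and are not OCI's actual hardware figures.

```python
def oversubscription_ratio(host_ports: int, host_port_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Ratio of host-facing bandwidth to uplink bandwidth on one leaf switch.

    A ratio <= 1.0 means the switch is not oversubscribed: all hosts can
    send toward the spine at full line rate simultaneously.
    """
    downlink_total = host_ports * host_port_gbps
    uplink_total = uplinks * uplink_gbps
    return downlink_total / uplink_total


# Hypothetical port counts and speeds, chosen only for illustration.
print(oversubscription_ratio(32, 100, 32, 100))  # 1.0 -> not oversubscribed
print(oversubscription_ratio(48, 25, 4, 100))    # 3.0 -> 3:1 oversubscribed
```

In the second case, 1,200 Gbps of host traffic competes for 400 Gbps of uplink capacity, which is exactly the situation in which one busy tenant can slow down its neighbors.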

Oracle Cloud Infrastructure (OCI) initially launched four regions, namely Phoenix, Ashburn, London, and Frankfurt. Each region comprises three Availability Domains (ADs), located in distinct data centers.

At the outset, Oracle created foundational services that were bound to a single AD to avoid any dependencies outside that AD. For instance, the compute service was designed in this manner.

However, Oracle revised its approach after the first four regions. It found that most customers don't use multiple ADs for high availability; instead, they rely on high availability within a single AD and use multiple regions for disaster recovery. In response, OCI launched more single-AD regions.

Off-box virtualization

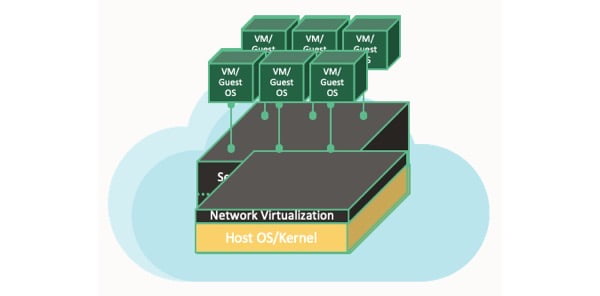

Traditional cloud providers run Virtual Machines (VMs) on top of a hypervisor. The hypervisor's main job is to isolate VMs that share the same CPUs and to capture each VM's I/O so that the VMs are abstracted from the hardware. For networking, the hypervisor presents each VM with a software-defined network interface card, which keeps the VM secure and portable. Because every packet between VMs passes through it, the hypervisor can also inspect that traffic and enable features such as IP whitelists and access control lists. The following diagram shows how this works:

When using in-kernel virtualization, the host hypervisor can become overloaded when inspecting packets to and from a virtual machine, as it is responsible for packet switching, encapsulation, and enforcing stateful firewall rules. Additionally, there is a risk of noisy neighbors, where a VM monopolizes bandwidth, disk I/O, and CPUs, causing issues for other VMs.
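To give a sense of the per-packet work that in-kernel virtualization puts on the host, the following toy Python class combines an IP allowlist with simple connection tracking, the two ideas behind the access control lists and stateful firewall rules mentioned above. It is an illustrative sketch, not OCI's (or any hypervisor's) implementation: for brevity it tracks flows by IP pair only, whereas real connection tracking usually keys on the full 5-tuple. The class, method names, and addresses are hypothetical.

```python
from __future__ import annotations

import ipaddress
from typing import NamedTuple


class Packet(NamedTuple):
    src: str
    dst: str
    dst_port: int


class StatefulFirewall:
    """Toy per-VM firewall: an ingress allowlist plus connection tracking.

    Hypothetical and simplified; flows are tracked by IP pair, ignoring ports.
    """

    def __init__(self, vm_ip: str, allowed_cidrs: list[str], allowed_port: int):
        self.vm_ip = vm_ip
        self.allowed = [ipaddress.ip_network(c) for c in allowed_cidrs]
        self.allowed_port = allowed_port
        self.tracked: set[tuple[str, str]] = set()  # (local IP, peer IP) flows opened by the VM

    def egress(self, pkt: Packet) -> bool:
        """Allow outbound traffic and remember the flow so replies can return."""
        self.tracked.add((pkt.src, pkt.dst))
        return True

    def ingress(self, pkt: Packet) -> bool:
        """Allow inbound traffic that answers a tracked flow or matches the allowlist."""
        if (pkt.dst, pkt.src) in self.tracked:
            return True
        src = ipaddress.ip_address(pkt.src)
        return pkt.dst_port == self.allowed_port and any(src in net for net in self.allowed)


fw = StatefulFirewall("10.0.0.5", ["192.168.1.0/24"], allowed_port=443)
print(fw.ingress(Packet("192.168.1.7", "10.0.0.5", 443)))    # True: allowlisted source and port
print(fw.ingress(Packet("203.0.113.9", "10.0.0.5", 443)))    # False: source not allowlisted
fw.egress(Packet("10.0.0.5", "203.0.113.9", 80))
print(fw.ingress(Packet("203.0.113.9", "10.0.0.5", 50000)))  # True: reply to a tracked flow
```

On a busy host, a check like this runs for every packet of every VM, which is why doing it inside the hypervisor competes with the VMs for CPU.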

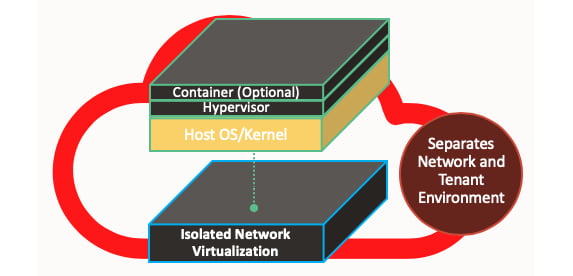

Off-box virtualization is different because it does not perform I/O virtualization within the hypervisor. Instead, it uses the network outside of the physical box. The control plane that runs the virtual network cannot be accessed from the public internet, but it is possible to create an explicit tunnel to reach the virtual network for monitoring, auditing, and emergency shutdowns. The diagram below illustrates that network I/O virtualization is not performed at the host hypervisor level.

Moving from in-kernel virtualization to off-box virtualization can significantly improve both performance and security, because the hypervisor no longer carries the overhead of network virtualization. Off-box virtualization also provides the flexibility to connect any device to the virtual network, including bare metal hosts, NVMe storage systems, VMs, containers, and engineered systems like Exadata. All of these devices can operate on the same virtual network and communicate with each other within just two hops. Please refer to the diagram below for a visual representation.

OCI offers bare metal servers that come without any pre-installed Oracle software, such as a hypervisor, which increases security compared to traditional virtualization. You can install any hypervisor you want on an OCI bare metal instance and then deploy VMs and install applications on it. OCI has no access to the memory space of these bare metal instances, which ensures complete physical isolation.

These bare metal instances are not shared with any co-tenants, which results in increased IOPS and bandwidth.

The security benefits of off-box virtualization

Server virtualization places an abstraction layer between the applications running on a virtualized server and the underlying hardware resources: compute, storage, and networking. This virtual infrastructure can be deployed without any disruption to the user experience. To gain the benefits of partitioning, isolation, and hardware independence, the CPU, main memory, network access, and I/O all need to be virtualized.

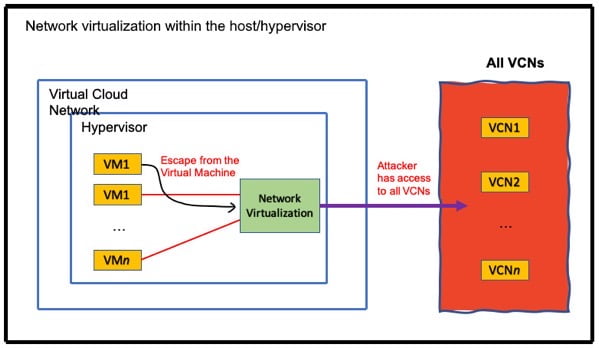

In first-generation public cloud infrastructures, traditional server virtualization can come at the cost of performance overhead and weaker security. The hypervisor must manage network traffic and I/O for all virtual machines running on a host, which leads to noisy-neighbor problems and decreased performance. Security can also be weaker because the hypervisor is fully trusted and makes access decisions on behalf of the virtual machines. If the hypervisor is compromised, an attacker can spread beyond that single hypervisor to other systems in the same cloud, potentially reaching any Virtual Cloud Network. The diagram below shows an example of in-kernel network virtualization.

The security isolation between the compute resources of different customers is of utmost importance. Oracle Cloud Infrastructure (OCI) has designed its security architecture with the assumption that customer-controlled compute resources can be harmful. OCI has a multi-layered defense security architecture that aims to reduce the security risks of traditional virtualization.

OCI uses off-box network virtualization, which removes network virtualization from the software stack (hypervisor) and places it in the infrastructure. This approach eliminates some of the drawbacks of traditional server virtualization. The off-box network virtualization technology is used in both bare metal instances (i.e. physical servers dedicated to a single customer) and VM instances.

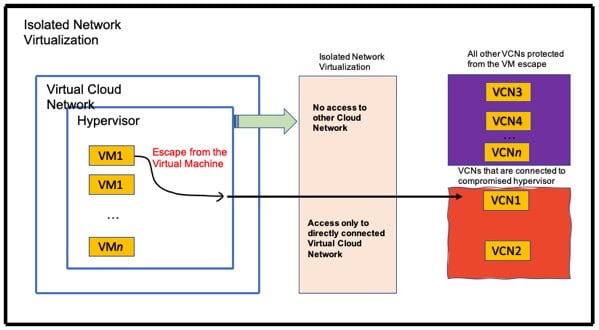

Isolated network virtualization limits the attack surface to the single VCN (Virtual Cloud Network) that the control plane connects to the hypervisor. OCI moves trust from the hypervisor to the isolated network virtualization, which is implemented outside the hypervisor. To understand how this works, take a look at the diagram below:

Bare metal is a unique feature of cloud computing where no hypervisor is needed to run resource virtualization for the network and I/O. In the Oracle Cloud Infrastructure (OCI), network virtualization is moved into the infrastructure, resulting in significant improvements in performance and security. The traditional virtualization overhead in the hypervisor is eliminated with this approach.

OCI uses a coprocessor in the infrastructure to perform network virtualization, which allows the hypervisor to focus on other virtualization tasks. By offloading network virtualization, a number of traditional hypervisor security risks are eliminated, creating a security boundary between the hypervisor running on one host and the virtual networks of other VMs running on other hosts.
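The security boundary described above can be sketched in a few lines of Python. The model assumes a hypothetical off-box device that sits between a host's network port and the fabric: the control plane binds each port to a VCN, and the device, not the host, decides which VCN a frame belongs to. Even if a compromised hypervisor writes a different VCN into its frames, the device ignores that claim and drops traffic addressed outside the bound VCN. All class and method names are invented for illustration and do not describe OCI's actual hardware or software.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """A frame as it leaves the host; every field is controlled by the host."""
    src_ip: str
    dst_ip: str
    claimed_vcn: str | None = None  # a compromised hypervisor could write anything here


@dataclass(frozen=True)
class Encapsulated:
    vcn: str
    inner: Frame


class OffBoxVirtualizer:
    """Hypothetical off-box device between a host port and the network fabric.

    The port -> VCN binding is set by the control plane, never by the host,
    so the host cannot put its traffic onto another VCN.
    """

    def __init__(self) -> None:
        self.port_to_vcn: dict[str, str] = {}
        self.vcn_members: dict[str, set[str]] = {}

    def bind(self, port: str, vcn: str, ips: set[str]) -> None:
        """Control-plane call: attach a host port to a VCN and record valid IPs."""
        self.port_to_vcn[port] = vcn
        self.vcn_members.setdefault(vcn, set()).update(ips)

    def forward(self, port: str, frame: Frame) -> Encapsulated | None:
        vcn = self.port_to_vcn.get(port)
        if vcn is None:
            return None  # port not bound to any VCN: drop
        members = self.vcn_members[vcn]
        if frame.src_ip not in members or frame.dst_ip not in members:
            return None  # source or destination outside this VCN: drop
        # The VCN tag comes from the binding; frame.claimed_vcn is ignored.
        return Encapsulated(vcn=vcn, inner=frame)


nic = OffBoxVirtualizer()
nic.bind("host-1", "vcn-a", {"10.0.0.2", "10.0.0.3"})
nic.bind("host-2", "vcn-b", {"10.1.0.2", "10.1.0.3"})
print(nic.forward("host-1", Frame("10.0.0.2", "10.0.0.3")))  # delivered on vcn-a
print(nic.forward("host-1", Frame("10.0.0.2", "10.1.0.3", claimed_vcn="vcn-b")))  # None: dropped
```

The design choice being illustrated is where the trust lives: the decision that matters for isolation is made from state the host cannot modify.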

OCI designed this solution to reduce the attack surface and potential damage that an attacker might cause in the event of a compromised hypervisor.

Moreover, OCI has also implemented additional layers of network isolation to prevent malicious actors from sending unauthorized network traffic, even if the attacker breaks through the first line of defense that is provided by off-box network virtualization.

For bare metal instances, off-box network virtualization provides a security boundary for the virtual network, preventing an attacker on a bare metal instance from accessing the virtual networks of other bare metal instances and VMs running on other hypervisors.

Fault domains

OCI has implemented a highly available infrastructure by distributing resources across regions and Availability Domains (ADs). A region is a localized geographic area containing one or more ADs. In its initial deployment, Oracle established multiple ADs within each of the Ashburn, Phoenix, Frankfurt, and London (UK) regions. These ADs are individual data centers that are physically separated from one another but located within the same region.

To further enhance availability, OCI created fault domains to isolate physical hardware within an AD. A fault domain is a grouping of rack hardware that is physically separated from the other fault domains in the same AD. Each AD contains three fault domains, and you can choose which one to use for your cloud resources, which provides high availability even in a region with only one AD. Fault domains let you apply anti-affinity rules to your cloud resources, and the physical hardware in a fault domain has redundant power supplies to improve availability. You can check out a high-level logical diagram of the physically separated fault domains within a single AD here:

Fault domains are determined by the racks of compute resources within an Availability Domain. All resources that share a rack belong to the same fault domain. Resources belonging to different fault domains cannot coexist on the same rack. Customers have the option to select the fault domain in which they want to create their resources. This selection is randomly mapped to a physical fault domain per tenancy in order to prevent uneven usage of fault domains.
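Anti-affinity across fault domains usually comes down to spreading replicas so that no single fault domain holds more than its share. The sketch below, assuming three fault domains and a simple round-robin policy, shows the idea in Python. The `FAULT-DOMAIN-n` labels mirror the naming format OCI uses for fault domains, but `place_replicas` is a hypothetical helper, not an OCI API; in practice you specify the fault domain when launching each instance.

```python
from __future__ import annotations

from collections import Counter

FAULT_DOMAINS = ("FAULT-DOMAIN-1", "FAULT-DOMAIN-2", "FAULT-DOMAIN-3")


def place_replicas(names: list[str],
                   fault_domains: tuple[str, ...] = FAULT_DOMAINS) -> dict[str, str]:
    """Spread instances round-robin across fault domains (anti-affinity).

    Each fault domain receives either floor(n / k) or ceil(n / k) of n
    replicas across k fault domains, so losing one fault domain takes out
    at most ceil(n / k) replicas.
    """
    return {name: fault_domains[i % len(fault_domains)] for i, name in enumerate(names)}


placement = place_replicas(["web-1", "web-2", "web-3", "web-4"])
print(placement)
print(Counter(placement.values()))  # how many replicas land in each fault domain
```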
